We’re Pip and Jennifer, user researchers working at HMRC’s digital delivery centre in Newcastle. We’ve been helping scrum teams use A/B testing to improve HMRC’s digital services for our users.

What is A/B testing?

A/B testing is when we randomly split website users between two versions of a page or journey: version A and version B. We measure which version performs better by monitoring key metrics, like unique page views or exit rate, and comparing the results between the two.
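To make the idea concrete, here is a minimal Python sketch of splitting users between two versions and calculating one metric for each. It is an illustration only, not the code behind HMRC's tests. It assumes a 50/50 split decided by hashing a user identifier, so the same user always sees the same version. The names `assign_variant`, `exit_rate` and `example-experiment` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "example-experiment") -> str:
    """Place a user in version A or B with a roughly 50/50 split.

    Assumption: hashing the experiment name with the user ID stands in for
    random assignment and keeps each user in the same version on every visit.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def exit_rate(exits: int, page_views: int) -> float:
    """Share of page views that ended with the user leaving the service."""
    if page_views == 0:
        return 0.0
    return exits / page_views


if __name__ == "__main__":
    # Hypothetical user IDs, used only to show the split.
    users = [f"user-{n}" for n in range(1000)]
    in_a = sum(1 for user in users if assign_variant(user) == "A")
    print(f"Version A: {in_a} users, version B: {len(users) - in_a} users")
```

Each version's metric is then calculated from its own group of users, for example `exit_rate(exits_a, views_a)` against `exit_rate(exits_b, views_b)`, and the two results are compared.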

Using A/B testing means we can try out small content and visual design improvements in real time with real users on beta or live services. We can fail fast and learn quickly, which is one of the core agile principles.

The A/B testing working group

We set up a cross-HMRC working group to help scrum teams design and run A/B tests on the services they’re building. Working group members include user researchers, behavioural insight and research experts and performance analysts from HMRC’s Digital Data Academy. The mix of roles in the group ensures we have the expertise and experience to design better A/B tests.

The working group helps scrum teams to:

- identify opportunities for A/B tests

- design a hypothesis

- build a test

- analyse the results

As the members of the group are dotted around the country, we have a video call every 2 weeks to manage the ongoing A/B tests. We always try to meet up in person every 3 months to upskill each other, share what we’ve learnt and agree our priorities.

What we’ve done so far

We’ve completed 8 A/B tests so far and we’re always happy to hear ideas for more from colleagues building other services. We’ve created technical guidance for front-end developers and testers, which we continually iterate as we learn more about building tests. We’ve also created templates to help user researchers design A/B tests based on user needs and ensure the right metrics are monitored.

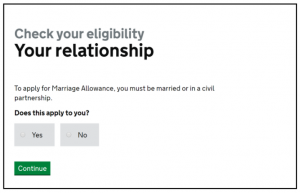

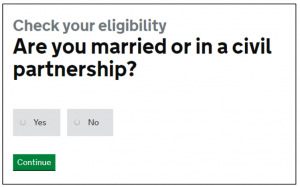

One of the A/B tests we conducted was on the Marriage Allowance service. We hypothesised that reducing the content on the eligibility checker would make the pages easier to understand and not impact the number of users completing the service.

The new design decreased the:

- number of words in the journey by 43%

- reading time by 44%

- reading age of the content

As a bonus on top of these design improvements, the A/B test showed that the redesigned content increased the completion rate of the eligibility journey by 1.9%. After presenting the service owners with all the evidence from the test, we implemented these changes to the Marriage Allowance service.
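A common way to check whether a difference in completion rate between two versions is more than chance is a two-proportion z-test. The sketch below is an illustration of that general technique, not a description of the analysis used for the Marriage Allowance test. The function name `two_proportion_z_test` is hypothetical, and the counts in the example are made up for the sketch; they are not results from the service.

```python
import math


def two_proportion_z_test(completions_a: int, users_a: int,
                          completions_b: int, users_b: int):
    """Compare completion rates for versions A and B.

    Returns (difference in rate, z statistic, two-sided p-value).
    """
    rate_a = completions_a / users_a
    rate_b = completions_b / users_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (completions_a + completions_b) / (users_a + users_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    if std_error == 0:
        raise ValueError("Completion rates of 0% or 100% in both groups cannot be tested")
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_b - rate_a, z, p_value


if __name__ == "__main__":
    # Made-up counts for illustration only.
    difference, z, p_value = two_proportion_z_test(500, 1000, 550, 1000)
    print(f"difference={difference:.3f}, z={z:.2f}, p={p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be down to chance alone. The threshold to use, and how many users each version needs, are worth agreeing before the test starts.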

Sharing knowledge

A highlight for us was presenting our work at a GDS cross-government performance analyst meetup in Sheffield. It was great to share what we’ve done so far with wider government and build interest in our work.

Our future plans

We want to work with more scrum teams to improve services and share what we’ve learnt with other government departments. Look out for our future blog posts about the A/B tests we’ve run and how we’re embedding A/B testing within agile service development at HMRC.