Hello, I’m Christine Hardy, a Lead User Researcher based in our Newcastle digital delivery centre, and this is Richard Watts, also a User Researcher. Our job is to lead on experimentation and research for all the live HMRC digital services, making sure we offer the best experience for our users.

We’ve recently been doing an exciting cross-channel experiment on our webchat service. If you're involved or interested in user research, you might like to hear about this and how our team's approach has helped optimise live services for users.

Webchat is here to support users

Webchat allows customers who may need help using our online services to communicate with us in real time using easily accessible web interfaces. It’s just one of the ways users can get help on some of our services, and over recent years, it has grown in popularity. Online users can ask for help when they are in their Personal Tax Account, with advisers handling their queries via an online chat.

Understanding goals and creating a hypothesis

HMRC's Customer Services function (our stakeholders) wanted to improve their online service support, in order to help more of our customers get what they need without having to pick up the phone. To do this, they needed to understand more about the effectiveness of the webchat and whether it should be more widely offered. From this, we identified the ‘problem’ they were trying to solve and created our hypothesis:

We predict that offering users webchat within an online journey,

will mean that users are more likely to stay in the digital channel if they need help

and result in fewer calls and Deskpro queries

Our approach to experimentation

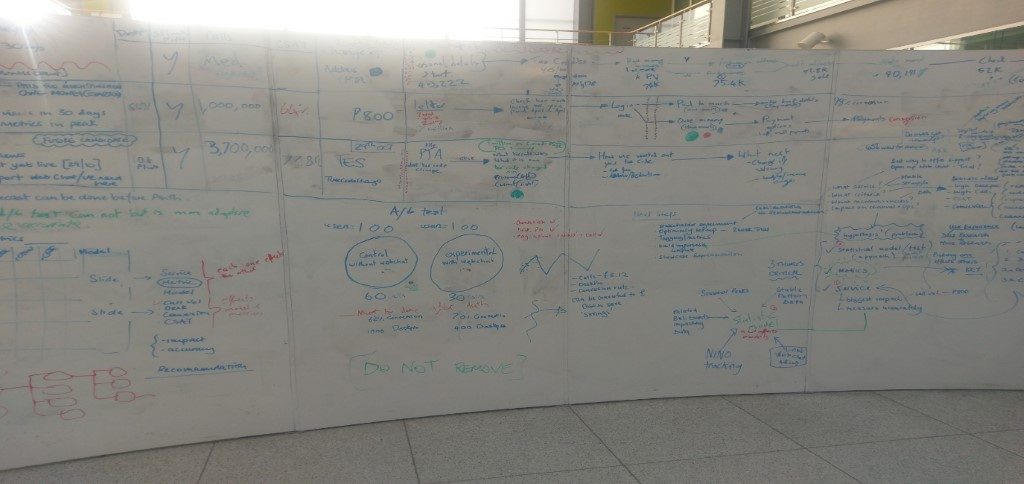

We identified three elements to the experiment:

- which HMRC digital service should we use in the experiment?

- what statistical model is best?

- what metrics do we monitor?

We needed to find out which of the above was most important, as prioritising one of these affects the other elements. For example, if we selected a particular service, this might restrict the types of metrics and, in turn, the statistical model used. Through speaking to people, it became clear the priority was to get the right metrics and measures in place first.

So, we set about agreeing our primary metrics:

- number of calls

- number of Deskpro (technical online help and support) queries

And our secondary metrics:

- number of clicks on webchat

- the customer satisfaction score of service

- the customer satisfaction score of webchat

- percentage of users who call within 24 hours of using a digital service

- views of “Contact us” pages on GOV.UK

We had already identified with our stakeholders that reducing call volumes was the main driver, so this would help determine which service and which model was best to use. To help identify the service, we journey-mapped some options and considered lots of factors such as traffic volumes and planned changes.

We recognised that we needed an experiment that:

- gave the best chance to observe an effect

- met the level of call advisers available

- would not disrupt a service

- had no critical business events that would affect results

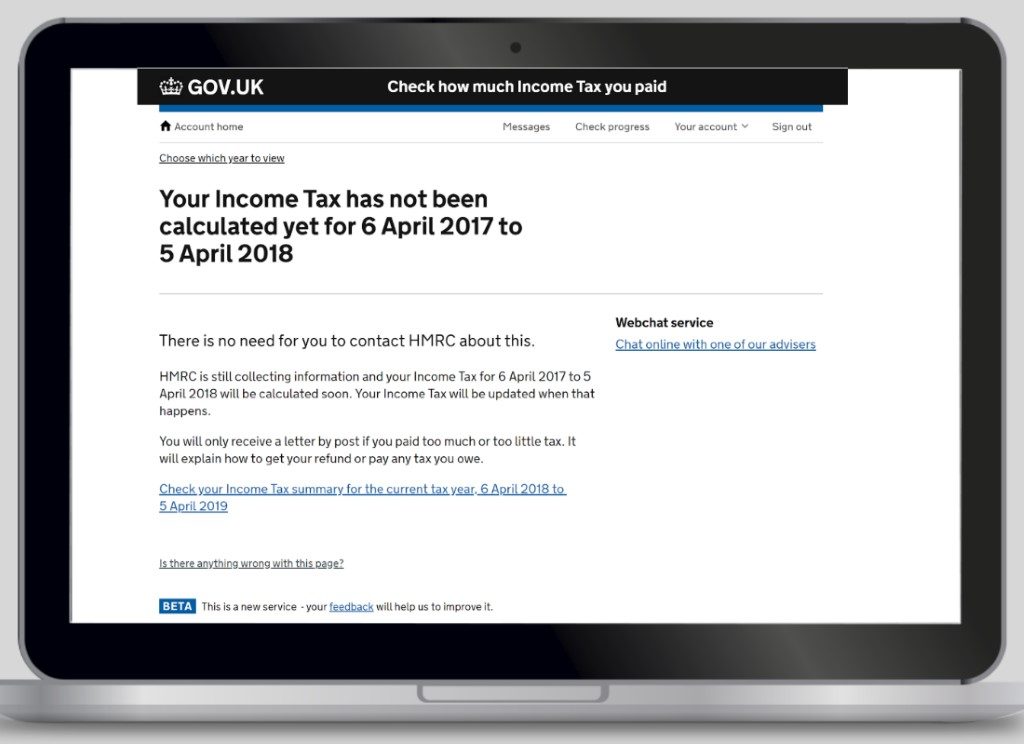

We decided the P800 service met these criteria best. This service allows PAYE taxpayers to view the amount of tax they have paid and the amount of tax they should have paid. If this differs then they need to either pay a shortfall or are due a refund.

Once we’d agreed on the metrics and the service, the final consideration was which statistical model to use. We looked at time series models versus experimental models, as the choice limits which aspects of a service's journey can be measured and to what degree of accuracy. We agreed that a randomised controlled, independent measures experiment was the best approach to use.
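To illustrate what an independent measures comparison can look like in practice, here is a minimal sketch of a two-proportion z-test that compares the share of users who go on to call in each group. This is one common way to analyse such a design, not necessarily the analysis used in this experiment. The function name and all counts are hypothetical and are not results from the experiment.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Two-sided z-test for a difference between two independent proportions."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts for illustration only, not results from the experiment.
control_calls, control_users = 120, 2000  # group offered webchat
variant_calls, variant_users = 160, 2000  # group not offered webchat

z, p_value = two_proportion_z_test(
    control_calls, control_users, variant_calls, variant_users
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A p-value below the chosen alpha level would suggest the difference in call rates between the two groups is unlikely to be down to chance alone.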

Designing the experiment

To help design the experiment we needed to understand:

- the P800 user journey

- the user scenarios

- what the web pages looked like now

- what the web pages will look like during the experiment

- how to make the experiment fair, to test our hypothesis

Mapping user journeys allowed us to see all the user scenarios. We considered broad categories of users such as those who had underpaid tax, overpaid tax or paid the right amount, as well as those who have not yet had a P800 calculation.

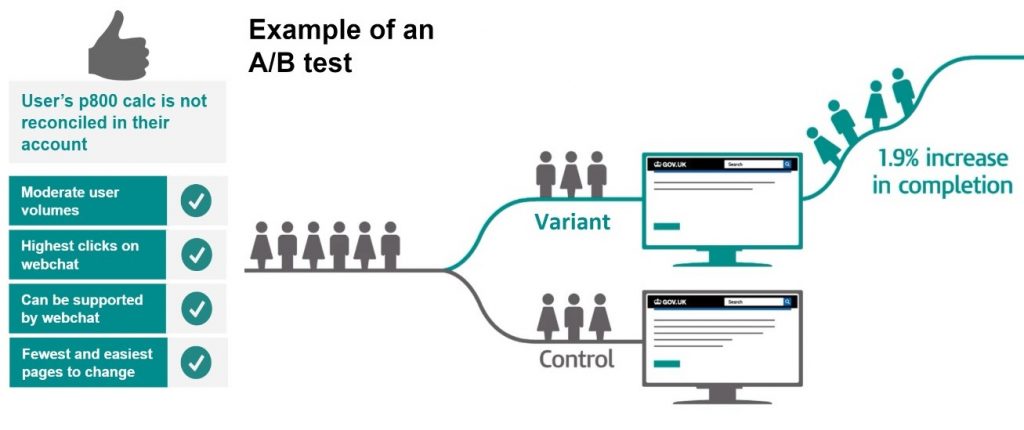

We then had to select the best journey in which to run the trial, so we used Google Analytics to understand user volumes and the number of webchat clicks. We did thematic analysis of webchat transcripts and reviewed tech support transcripts. Finally, we assessed the technical effort and impact on the front-end, and identified that the not-yet-calculated journey was best for an A/B test.

We designed and set up the A/B test. The ‘control’ group were offered webchat:

And for the ‘variant’ group, webchat was turned off (not offered) and 50% of users were randomly shown this page.
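In our case the A/B testing tool handled the random split. For readers who want to see the idea, here is a minimal sketch of one common way to allocate users 50/50 so that the same user always sees the same version. It is not a description of how Optimizely works internally, and `assign_group`, the experiment name and the user identifiers are all hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "p800-webchat") -> str:
    """Return 'control' or 'variant' with roughly equal probability.

    Hashing the user id (rather than calling a random number generator on
    every page view) keeps the allocation stable for a returning user.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    # control: webchat offered; variant: webchat turned off
    return "control" if bucket < 50 else "variant"


# Hypothetical user identifiers, for illustration only.
for user in ["user-001", "user-002", "user-003"]:
    print(user, assign_group(user))
```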

We then had to:

- work out the number of HMRC customer services webchat agents needed to get statistical significance based on conversion to calls from digital, expected effect and 0.05 alpha level

- get buy-in from stakeholders and engage webchat agents

- understand the impact on operational resource planning

- decide what other qualitative research was needed

- design the experiment and set it up in Optimizely (A/B testing tool)

- tag all metrics

- start the experiment
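The sizing step in the list above can be sketched with a standard sample size formula for comparing two proportions. This is a minimal illustration rather than our actual calculation: the baseline and expected call rates and the 80% power level are assumptions made for the example, and only the 0.05 alpha level comes from the experiment design.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.8):
    """Users needed in each group to detect a change from p_baseline to
    p_expected with a two-sided test at the given alpha and power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_baseline + p_expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(
            p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
        )
    ) ** 2
    return ceil(numerator / (p_baseline - p_expected) ** 2)


# Hypothetical rates: 8% of users call today, and we hope to see 6%.
n = sample_size_per_group(p_baseline=0.08, p_expected=0.06)
print(f"Users needed per group: {n}")
```

A figure like this, combined with expected traffic and the share of users likely to start a chat, is the kind of input that can then feed into an estimate of how many webchat advisers are needed.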

We supported the A/B test by doing additional quantitative and qualitative user research to find answers to our research objectives, which were to:

- understand the impact webchat has on user experience and satisfaction

- understand how webchat affects demand for support across Deskpro and telephony

- identify improvements to our ways of working

We interviewed users, ran a diary study with advisers, and analysed webchat transcripts along with all of the experiment data.

By using this mixed methodology approach we could understand more about what is happening and why it's happening, and get richer insight.

Engaging our stakeholders

Everything we did, we did collaboratively with our stakeholders. Before running the experiment we reassured them we could:

- target the unique URL (a specific address on the web)

- tag users who view either the control or variant, and then link this to Google Analytics and call centre data

- automatically turn the experiment on and off at certain times of day

- pause the experiment if there are any critical operational issues
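As an illustration of the last two points, here is a minimal sketch of the kind of rule that switches an experiment on and off by time of day and allows a manual pause. The Monday to Friday, 8am to 8pm window matches the hours we ran the experiment. The function name and the `paused` flag are hypothetical and are not part of any particular testing tool.

```python
from datetime import datetime, time

START = time(8, 0)   # 8am
END = time(20, 0)    # 8pm


def experiment_is_active(now: datetime, paused: bool = False) -> bool:
    """Return True only on weekdays between 8am and 8pm, unless paused."""
    if paused:
        return False  # manual override for critical operational issues
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and START <= now.time() < END


print(experiment_is_active(datetime(2024, 1, 1, 9, 30)))
```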

Our results

We ran the experiment for 4 weeks (Monday to Friday from 8am to 8pm) and over this period 3,794 webchat queries were handled by advisers allocated to the experiment. We did a period of intensive analysis and presented insights back to our stakeholders.

We found overall that:

- 78% of webchat users would not need to contact HMRC again about their query

- 18% would need to contact HMRC again, but would do so on webchat

When webchat is available:

- the number of tech support tickets raised falls by 43.47%

- users find webchat quicker and easier than phoning up

- 89% of users who visit the pre-chat form go on to initiate a webchat

- P800 users can get their complex queries resolved on webchat

The experiment also revealed interesting insight. We found our users:

- use webchat when multi-tasking

- prefer doing webchat during working hours, as it's less disruptive

- were 84.2% satisfied with the webchat service they received

- are seeking in-channel support, as 8.63% clicked the link to start a chat

- create duplicate contact, as 60%+ who asked for technical support also clicked on webchat

- weren’t aware HMRC’s webchat was with a real person

- like the transcripts option, as it’s a useful record to go back to

We found our digital inclusive users:

- with dyslexia – need better features (for example coloured text, sound bites)

- with anxiety/depression – prefer webchat (as less socially demanding)

- with English as an additional language – prefer webchat (as they can use Google Translate)

We found HMRC webchat advisers:

- prefer handling chats and can do multiple chats

- can help users to navigate easier and faster on webchat

- need better tools

Will we be doing more experiments?

Our findings showed that offering webchat results in users staying online and having a better experience. So, it's great that all our findings are being acted on as part of HMRC’s review of its customer support model.

It's fantastic to see so many people passionate about placing users at the centre of what we do and optimising HMRC's services through experimentation. And, going forward, HMRC will continue to learn by doing lots more innovative experiments like this.

If you’re a user researcher and you want to learn more

There is a wealth of information out there to help you. We’ve listed some blogs below, or if you'd like to have a chat, just leave us a comment and we’ll get in touch. Plus, we have User Researcher vacancies open at HMRC for application right now, so why not check them out and come and join HMRC’s digital team?

Christine Hardy & Richard Watts, User Researchers
